Calculating ROI and attributing value to an Out-of-Home (OOH) advertising campaign, such as billboards or car wraps, requires billions of data points from mobile location data. One of the most challenging problems when working with mobile location data is building accurate exposed and unexposed groups. That challenge means it is not always possible to determine causality from the information we have.

It also means that without proper care, you often end up with uplift well over 200%.

We're not kidding - we have actually seen uplift studies with numbers as high as 1000% from some of the largest attribution companies out there.

Companies have actually claimed that their campaign was so effective it increased visitation rates in the exposed group by 1000%.

Intuitively, this cannot be correct. That would be the most effective campaign in the history of advertising. If the propensity to purchase is similar between exposed and unexposed groups, then there should be an eye-watering boost to sales. Imagine a massive campaign of 200 cars and 200 billboards. You would “expose” the majority of a population, even in a big city like New York, and revenue would subsequently grow by hundreds of percent. It doesn’t, so there is an obvious catch here.

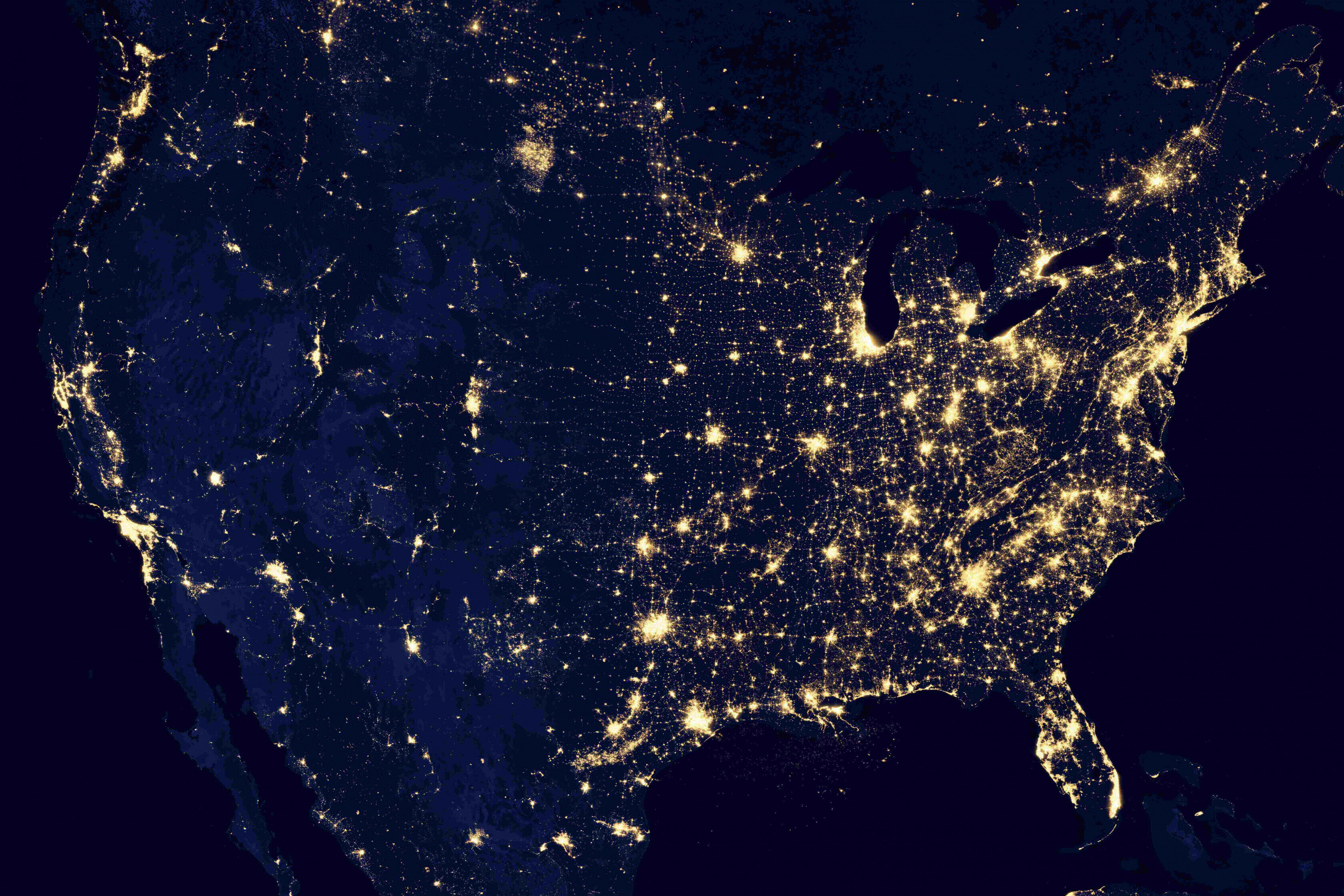

Case in point: the graphic depicts the location and number of impressions for a recent 80-car, 4-week campaign in New York City. The campaign delivered almost 25 million impressions, compared to some 10 to 15 million potential unique impressions.

That means the average person was exposed roughly twice. Sure, some people weren’t exposed, but in a typical week in NYC I see one of our cars at least a few times (I live and work there). Over a month, it becomes nearly impossible for me not to see an ad.

How do I find an unexposed person equivalent to myself, someone who has not seen the ad? The answer is that if the campaign is massive, few people have an unexposed equivalent.
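A back-of-the-envelope sketch shows why unexposed equivalents become scarce. It assumes, purely for illustration, that the number of exposures per person follows a Poisson distribution whose mean is total impressions divided by unique reach; real OOH exposure is far more clustered by neighborhood and commute, so treat the output as a rough order of magnitude, not a measurement. The function names are hypothetical.

```python
import math

def average_frequency(impressions: float, unique_reach: float) -> float:
    """Average exposures per person: total impressions spread over the unique audience."""
    return impressions / unique_reach

def share_never_exposed(avg_frequency: float) -> float:
    """Probability of zero exposures if exposures per person are (assumed) Poisson-distributed."""
    return math.exp(-avg_frequency)

IMPRESSIONS = 25_000_000  # NYC campaign figure from the text
for unique_reach in (10_000_000, 15_000_000):  # potential unique impressions quoted in the text
    freq = average_frequency(IMPRESSIONS, unique_reach)
    print(f"reach {unique_reach:,}: {freq:.2f} exposures/person, "
          f"{share_never_exposed(freq):.1%} never exposed")
```

Under this assumption only about 8% to 19% of the population is left entirely unexposed, and that small remainder is the only pool from which every unexposed "equivalent" can be drawn.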

Why This Happens

At mobilads, we have always chosen to stay honest.

That means we don't exaggerate, and we give brands honest feedback on the success of their campaigns. We do this because we think that:

- Our campaigns are effective without exaggerated numbers

- By delivering an honest and reliable analysis, we can work with brands to optimize where and how we advertise for them, adding even more value

We do ourselves and the brands we partner with no favors by providing flawed analysis, and flawed analysis blocks the valuable step of optimization.

How do these numbers get so skewed? Our competitors are not lying; they have run the numbers without proper respect for the underlying data they work with. The problem has its roots in selection bias, which breaks down into two essential topics: selection bias due to methodology and selection bias due to the data.

Selection Bias Due to Methodology

An experimental study starts with an exposed and an unexposed group. The problem with OOH is that you cannot randomly assign people to see an ad. You can try to “simulate” which people could have seen an advertisement, accounting for obstructions or speed. In practice, this does not work. First, GPS data is inherently inaccurate, so the idea that you can adequately judge obstacles and point of view is wrong. Second, and more importantly, this is simply not a random selection.

Since we cannot randomly assign people to see an advertisement in the real world, we need to be careful about the conclusions we draw.

This means that when comparing exposed and unexposed groups, we can say we observed a 1000% (cough) uplift in visitation rate, but not that the campaign caused it. We may simply have succeeded in advertising to the audience most likely to be your customers.
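A small simulation makes this concrete. It is a hypothetical model, not our production methodology: a latent "affinity" (think of people who live or commute near both the ad routes and the store) raises both the chance of being exposed and the chance of visiting, while exposure itself has zero causal effect on visits. "Uplift" is assumed here to mean the exposed visitation rate divided by the unexposed rate, minus one; all probabilities are made up for illustration.

```python
import random

def simulate_confounded_study(n_users: int = 100_000, seed: int = 0):
    """Hypothetical panel where exposure has NO causal effect on store visits,
    but a shared latent 'affinity' drives both exposure and visits."""
    rng = random.Random(seed)
    counts = {True: [0, 0], False: [0, 0]}  # exposed? -> [users, visits]
    for _ in range(n_users):
        affinity = rng.random()  # latent trait, uniform on [0, 1)
        # Exposure is NOT randomly assigned: high-affinity users are more likely to see the ad.
        exposed = rng.random() < 0.2 + 0.6 * affinity
        # Visiting depends only on affinity, never on exposure.
        visited = rng.random() < 0.01 + 0.09 * affinity
        counts[exposed][0] += 1
        counts[exposed][1] += int(visited)
    rate_exposed = counts[True][1] / counts[True][0]
    rate_unexposed = counts[False][1] / counts[False][0]
    # Relative difference in visitation rates (assumed definition of uplift).
    uplift = rate_exposed / rate_unexposed - 1
    return rate_exposed, rate_unexposed, uplift

rate_exposed, rate_unexposed, uplift = simulate_confounded_study()
print(f"exposed {rate_exposed:.2%}, unexposed {rate_unexposed:.2%}, uplift {uplift:.0%}")
```

With these made-up parameters the expected exposed and unexposed visitation rates are 6.4% and 4.6%, so the naive comparison reports roughly 39% uplift from a campaign that, by construction, did nothing.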

Selection Bias Due to Data

We've explained that the conclusions you can draw from an exposed/unexposed study are limited in an environment where you cannot perform random selection. But we have not explained why these studies end up with extraordinary uplift numbers. This is the more misleading problem, and it is a bit more nuanced.

Mobile GPS data is very skewed. We might see one user 100 times a day and over 1,000 times a month, and another user only 100 times over an entire month. The fact that a user is seen rarely does not mean they were not exposed, even when our model classifies them as unexposed. It just means we don’t always know where they are.

What happens when you try to match users in both space and time, and some users have more data points than others? In other words, the completeness of information for some users is many times higher than for others. The result is that users with a lot of data points are more likely to be classified as exposed. Because those users have a lot of data points, your information about them is more complete, and you are also more likely to know that they visited your store.

In the example below, we see two possible users - one with a higher frequency than the other. How likely is it that the “Low-Frequency User” didn’t see the ad?

More data means more complete information, which means more recorded exposures and more recorded visits (to anywhere).

When you perform an experimental study, people with a lot of data points are more likely to be exposed AND more likely to have a recorded visit to your store. This is simply due to the nature of the data. So when you run that study, of course, you get a crazy uplift in visitation rate: you probably ended up selecting a bunch of users with a lot of data.
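Here is a deliberately simple, hypothetical model of that mechanism, not our production pipeline. Everyone has the same true exposure rate and the same true visit rate, and the ad has no effect at all; the only difference between users is how often we get a GPS ping (`ping_prob`, an illustrative stand-in for completeness of information). An exposure or a visit is recorded only if a ping lands at that moment, and within a segment the two recordings are assumed independent. The rates and segment sizes are invented.

```python
from dataclasses import dataclass

@dataclass
class UserSegment:
    share: float      # fraction of the panel in this segment
    ping_prob: float  # hypothetical chance we observe any given moment (data completeness)

TRUE_EXPOSURE_RATE = 0.6  # assumed real-world exposure rate, identical for everyone
TRUE_VISIT_RATE = 0.05    # assumed real-world visit rate, identical for everyone, unaffected by the ad

def naive_uplift(segments: list[UserSegment]) -> float:
    """Expected observed uplift (exposed vs unexposed visitation rate) when exposures
    and visits are only recorded if a GPS ping happens to land at that moment."""
    p_obs_exposed = p_visit_and_exposed = p_visit_and_unexposed = 0.0
    for s in segments:
        obs_exposed = TRUE_EXPOSURE_RATE * s.ping_prob
        obs_visit = TRUE_VISIT_RATE * s.ping_prob
        # Within a segment, recorded exposure and recorded visit are independent.
        p_obs_exposed += s.share * obs_exposed
        p_visit_and_exposed += s.share * obs_exposed * obs_visit
        p_visit_and_unexposed += s.share * (1 - obs_exposed) * obs_visit
    rate_exposed = p_visit_and_exposed / p_obs_exposed
    rate_unexposed = p_visit_and_unexposed / (1 - p_obs_exposed)
    return rate_exposed / rate_unexposed - 1

segments = [UserSegment(share=0.5, ping_prob=0.9),  # "high-frequency" users
            UserSegment(share=0.5, ping_prob=0.1)]  # "low-frequency" users
print(f"naive uplift: {naive_uplift(segments):.0%}")
```

With half the panel observed 90% of the time and half 10% of the time, this model reports about 126% uplift from a campaign that did nothing. With a single segment, where everyone is equally complete, the same function returns zero.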

How Do You Control For This?

It is not enough to match users based on behavior; you have to match users based on behavior AND completeness of information. We created an internal metric called Completeness Of Information (COI). The higher the COI score, the more we know about a user. We can then pair users up based on two factors: COI, as discussed above, and user profiles, which are based on all the places a user has visited. Now we can pair up users and say:

- The users have a similar COI score, and

- These users have similar profiles.

And so, they are a good match for observing differences.
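Our COI metric and profile model are internal, so the following is only a hypothetical sketch of the idea. COI is approximated here as the share of hours in a 4-week window in which a user was seen at all, a profile is a count of visits per place category, and pairing is greedy one-to-one matching on cosine similarity of profiles, restricted to candidates within a COI caliper. The names `coi`, `match_users`, `coi_caliper`, and `min_similarity`, and their default values, are illustrative assumptions, not our production code.

```python
import math
from dataclasses import dataclass

STUDY_HOURS = 24 * 28  # hypothetical 4-week study window

@dataclass
class User:
    user_id: str
    exposed: bool
    active_hours: int        # hours in the window with at least one GPS ping
    profile: dict[str, int]  # visit counts per place category

def coi(user: User) -> float:
    """Hypothetical COI score: share of hours in the window in which we saw the user."""
    return user.active_hours / STUDY_HOURS

def cosine_similarity(a: dict[str, int], b: dict[str, int]) -> float:
    dot = sum(count * b.get(category, 0) for category, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def match_users(users: list[User], coi_caliper: float = 0.05, min_similarity: float = 0.8):
    """Greedy 1:1 matching without replacement: each exposed user is paired with the
    most profile-similar unexposed user whose COI lies within `coi_caliper`."""
    available = [u for u in users if not u.exposed]
    pairs = []
    for treated in (u for u in users if u.exposed):
        candidates = [c for c in available if abs(coi(c) - coi(treated)) <= coi_caliper]
        if not candidates:
            continue  # no control with comparable data completeness: skip, don't force a match
        best = max(candidates, key=lambda c: cosine_similarity(treated.profile, c.profile))
        if cosine_similarity(treated.profile, best.profile) >= min_similarity:
            pairs.append((treated.user_id, best.user_id))
            available.remove(best)
    return pairs

users = [
    User("a", True, 400, {"grocery": 8, "gym": 2}),
    User("b", False, 390, {"grocery": 7, "gym": 3}),
    User("c", False, 40, {"grocery": 8, "gym": 2}),  # same profile as "a", far lower COI
    User("d", True, 30, {"bar": 5}),
]
print(match_users(users))
```

User "c" has exactly the same profile as "a" but is rejected because its COI is far lower; matching on profile alone would have paired them. User "d" stays unmatched because no comparable control is similar enough, which we prefer to a bad match.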

When you do this, the numbers come down. A lot.
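To illustrate why, here is a hypothetical simulation (invented parameters, not client data) in which the ad has no effect and users differ only in their ping rate, used as a stand-in for COI. Comparing exposed users only with unexposed users at the same COI level, a simple form of exact matching, removes the spurious uplift that the naive comparison reports. The function names and COI levels are assumptions for illustration.

```python
import random
from collections import defaultdict

COI_LEVELS = (0.1, 0.5, 0.9)  # hypothetical ping rates standing in for COI

def simulate(n_users: int = 200_000, seed: int = 7):
    """Hypothetical panel: everyone has the same true exposure (60%) and visit (5%) rates
    and the ad has no effect; only the ping rate (our COI stand-in) differs."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n_users):
        coi_level = rng.choice(COI_LEVELS)
        # An exposure or a visit is only recorded if a ping lands at that moment.
        exposed = rng.random() < 0.6 and rng.random() < coi_level
        visited = rng.random() < 0.05 and rng.random() < coi_level
        rows.append((coi_level, exposed, visited))
    return rows

def naive_panel_uplift(rows) -> float:
    """Exposed vs unexposed visitation rates with no regard for COI."""
    n_exp = sum(1 for _, e, _ in rows if e)
    v_exp = sum(1 for _, e, v in rows if e and v)
    v_unexp = sum(1 for _, e, v in rows if not e and v)
    return (v_exp / n_exp) / (v_unexp / (len(rows) - n_exp)) - 1

def coi_matched_uplift(rows) -> float:
    """Compare exposed users only with unexposed users at the same COI level
    (exact matching on COI), weighting each level by its exposed headcount."""
    cells = defaultdict(lambda: [0, 0])  # (coi_level, exposed) -> [users, visits]
    for coi_level, exposed, visited in rows:
        cells[(coi_level, exposed)][0] += 1
        cells[(coi_level, exposed)][1] += int(visited)
    observed = expected = 0.0
    for level in COI_LEVELS:
        n_exp, v_exp = cells[(level, True)]
        n_unexp, v_unexp = cells[(level, False)]
        observed += v_exp
        # Visits the exposed users would make at their matched controls' rate.
        expected += n_exp * v_unexp / n_unexp
    return observed / expected - 1

rows = simulate()
print(f"naive uplift:       {naive_panel_uplift(rows):.0%}")
print(f"COI-matched uplift: {coi_matched_uplift(rows):.0%}")
```

In expectation the naive figure for this setup is around 75%, while the COI-matched figure sits near zero, which is the true effect built into the simulation.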

If you’re looking for giant numbers in the hundreds of percent, you probably don’t want to go this route. But if you’re interested in the actual difference in visitation rate (or any other metric), then this is perfect. A well-designed, well-run campaign that is effective at reaching your target audience will almost always result in a higher visitation rate, as high as 200-300%. This depends on factors like the number of stores, market saturation, the size and duration of the campaign, and the type of store (e.g., people buy groceries more often than they buy phones). However, as we’ve mentioned, we can’t attribute this number to your campaign. At this point, all we know is that we have observed a statistically significant difference between the exposed and unexposed groups.
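As a hedged illustration of what "statistically significant" can mean here, the sketch below runs a standard two-sided two-proportion z-test on visitation rates of two matched groups. The visit counts are made up, and we are not claiming this is the exact test behind our reports; it relies on the normal approximation, which is only reasonable when each group records enough visits.

```python
import math

def two_proportion_z_test(visits_a: int, n_a: int, visits_b: int, n_b: int):
    """Two-sided two-proportion z-test on visitation rates, using a pooled standard error.
    Returns (relative uplift of A over B, z statistic, p-value)."""
    p_a, p_b = visits_a / n_a, visits_b / n_b
    pooled = (visits_a + visits_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_a - p_b) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return p_a / p_b - 1, z, p_value

# Hypothetical matched groups: 20,000 exposed and 20,000 unexposed users.
uplift, z, p = two_proportion_z_test(visits_a=600, n_a=20_000, visits_b=500, n_b=20_000)
print(f"uplift {uplift:.0%}, z = {z:.2f}, p = {p:.4f}")
```

A 3.0% versus 2.5% visitation rate is a 20% uplift with z of about 3.06, comfortably significant at these sample sizes. Significance still says nothing about causality, which is why the next step matters.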

So we’ve got a realistic number, but we still only have observed differences between groups, and, as discussed above, we cannot determine causality. Interested in how you can go about that? One way is to use Bayesian time-series models. We walk through that process in a case study in our post, Quantifying Business Impact: A Case Study in Causality.

Measure business impact by measuring the effect directly on your business. It sounds obvious, but it's hard to implement. Luckily, we've done the hard work for you.

Jesse Moore - Chief Technology Officer - jesse@mobilads.co